Introduction

The term Infrastructure as Code (IaC) is more common nowadays, but the concept existed before the advent of cloud computing. IaC refers to managing and delivering infrastructure using code: you write code to define, deploy, update, and delete infrastructure components (servers, databases, etc.). Broadly, infrastructure automation via code can be classified into these five buckets:

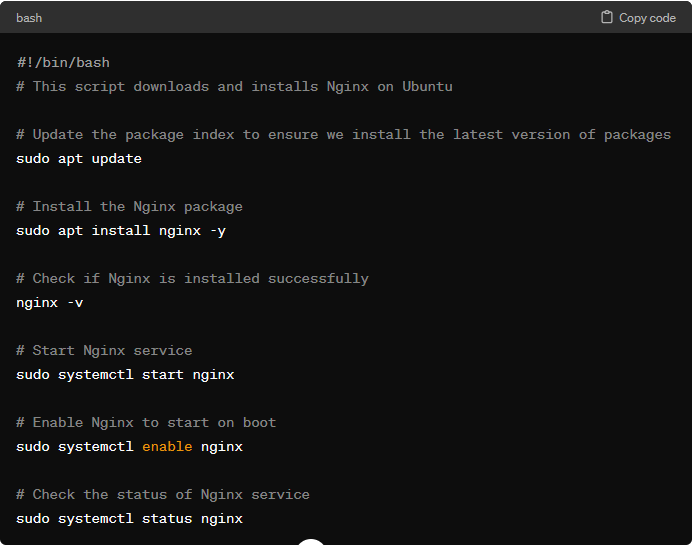

Custom Scripts

This is the classic way of installing software and starting up servers using reusable scripts, typically written in languages like Bash, Ruby, or Python. For example, a short Bash script can update the package index, install nginx (open source software for web serving, reverse proxying, and caching), and start the nginx service on an Ubuntu server. Such scripts are run by admins who manage servers across environments and are responsible for installing and configuring the software stack. These scripts were, and still are, flexible, and every team built up a rich repository of scripts tailored to its own environments. The downside is that they follow no common conventions, so mid-size and large organizations end up with a large body of spaghetti code. This led to the creation of infrastructure configuration tools.
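Custom scripts of this kind are often written in Python as well as Bash. The following is a minimal Python sketch of such a script, assuming an Ubuntu host with `apt-get`, `systemctl`, and sudo rights. The `install_nginx` function and its `dry_run` flag are hypothetical; by default the sketch only prints the commands it would run.

```python
import shlex
import subprocess

# Steps a typical ad-hoc provisioning script performs on an Ubuntu server.
# Assumes apt-get, systemctl, and sudo are available on the target host.
NGINX_STEPS = [
    ["sudo", "apt-get", "update"],
    ["sudo", "apt-get", "install", "-y", "nginx"],
    ["sudo", "systemctl", "start", "nginx"],
]


def install_nginx(dry_run: bool = True) -> list[str]:
    """Run (or, with dry_run=True, only print) each step in order.

    Returns the shell-quoted commands so callers can log or inspect them.
    Note the imperative style: every step runs every time, with no check
    of what is already installed on the server.
    """
    executed = []
    for cmd in NGINX_STEPS:
        line = shlex.join(cmd)
        executed.append(line)
        if dry_run:
            print(f"[dry-run] {line}")
        else:
            # check=True aborts the script on the first failing step.
            subprocess.run(cmd, check=True)
    return executed


if __name__ == "__main__":
    install_nginx(dry_run=True)
```

A script like this works well on one machine, but nothing about its layout, naming, or error handling is dictated by a convention, which is exactly what makes large collections of such scripts hard to maintain.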

Infrastructure Configuration Tools

Chef, Puppet, Ansible, and SaltStack are infrastructure configuration tools designed to automate software installation and management on servers. Unlike custom scripts, these tools follow coding and configuration conventions. Ansible, for example, enforces a consistent, predictable structure, clearly named parameters, secrets management, and so on. Whereas each author of a custom script follows their own pattern and style, Ansible's conventions make the code easy for other developers to follow and understand.

Another benefit of Ansible is distribution. A Bash script is meant to run on a single machine; to run it on several machines, you have to copy it to each one and execute it there. Ansible, by contrast, can execute the same code across many servers in parallel, and it handles rolling deployments effectively. Achieving similar results with Bash scripts would take hundreds of lines of code.
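To make these two behaviors concrete, here is a minimal Python sketch of running the same task on many hosts in parallel, and of rolling it out in fixed-size batches so only part of the fleet changes at once (similar in spirit to Ansible's `serial` keyword). The host names and the `deploy`, `run_parallel`, and `run_rolling` functions are hypothetical stand-ins; a real tool would connect to each host, typically over SSH.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical inventory; a real tool would read this from an inventory file.
HOSTS = [f"web-{i}" for i in range(1, 7)]


def deploy(host: str) -> str:
    """Stand-in for the real work (e.g., connect and install/restart nginx)."""
    return f"{host}: ok"


def run_parallel(hosts: list[str], max_workers: int = 10) -> list[str]:
    """Run deploy() on every host concurrently; results keep input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(deploy, hosts))


def run_rolling(hosts: list[str], batch_size: int) -> list[list[str]]:
    """Deploy in batches of batch_size; each batch finishes before the next.

    Hosts outside the current batch are untouched, so in a real fleet
    they could keep serving traffic during the rollout.
    """
    batches = []
    for start in range(0, len(hosts), batch_size):
        batch = hosts[start:start + batch_size]
        results = run_parallel(batch)
        # Halt the rollout if any host in this batch failed,
        # leaving later batches untouched.
        if not all(r.endswith(": ok") for r in results):
            raise RuntimeError(f"batch {batch} failed; halting rollout")
        batches.append(results)
    return batches


if __name__ == "__main__":
    print(run_parallel(HOSTS))
    for i, batch in enumerate(run_rolling(HOSTS, batch_size=2), start=1):
        print(f"batch {i}: {batch}")
```

Even this toy version needs a thread pool, batching, and failure handling; a configuration tool provides these out of the box.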

Server Imaging Tools

Server imaging tools (Docker, Vagrant, Packer) act as an abstraction layer over infrastructure configuration tools. Instead of launching individual servers and configuring each one manually, you create pre-configured server images. These images contain a snapshot of the operating system, the software binaries, configuration files, and other relevant details. You can then use any IaC tool, including configuration management tools like Ansible, or the commands provided by the imaging tool itself, to install these images on servers.
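The core idea of an image, building a snapshot once and launching many identical servers from it, can be sketched in a few lines of Python. This is a conceptual model only, not how Docker or Packer work internally: the `Image` class, its fields, and the `launch` function are hypothetical, and the SHA-256 digest merely imitates the way image tools identify an image by its content.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class Image:
    """Hypothetical model of a server image: a frozen snapshot of its contents."""
    os: str
    packages: tuple[str, ...]
    config_files: tuple[tuple[str, str], ...]  # (path, contents) pairs

    @property
    def digest(self) -> str:
        """Content-derived ID: identical contents always give the same digest."""
        payload = json.dumps(asdict(self), sort_keys=True).encode()
        return "sha256:" + hashlib.sha256(payload).hexdigest()


def launch(image: Image, name: str) -> dict:
    """Pretend to boot a server from the image; no configuration happens afterwards."""
    return {"name": name, "image": image.digest}


if __name__ == "__main__":
    web = Image(
        os="ubuntu-22.04",
        packages=("nginx",),
        config_files=(("/etc/nginx/conf.d/app.conf", "listen 80;"),),
    )
    servers = [launch(web, f"web-{i}") for i in range(3)]
    # Every server launched from the same image reports the same digest.
    print({s["image"] for s in servers})
```

Because the image is frozen and identified by its contents, any change (a new package, an edited config file) produces a new image rather than drifting the servers already running.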

There are two broad categories of tools for working with images:

Virtual Machines: A virtual machine (VM) emulates an entire computer system, including the hardware, using a hypervisor such as VMware or VirtualBox. It virtualizes the CPU, memory, storage, and networking. VMs provide isolated environments, but virtualizing all this hardware demands significant processing power, which leads to high CPU and memory usage and long startup times.

Containers: A container emulates the user space of an operating system using a container engine such as Docker or CoreOS rkt. It isolates processes, memory, mounts, and networking. Although containers offer isolation similar to that of VMs, they share the server’s OS kernel and hardware, which makes them less secure than VMs. In return, they boot up rapidly and have minimal CPU and memory overhead.

Deployment Management Tools

Server imaging tools are great for creating VMs and containers, but once created, these need to be managed continuously. This includes monitoring the health of the VMs and containers, automatically replacing unhealthy ones (auto healing), and scaling up and down in response to traffic load (auto scaling). These management and orchestration tasks fall under the realm of deployment management tools such as Kubernetes, Amazon ECS, and Docker Swarm. Kubernetes, for example, lets you define as code how your Docker containers should be managed.
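Auto healing and auto scaling both come down to a control loop that compares the desired state with what is actually running. The Python sketch below is a simplified, hypothetical model of one pass of such a loop, not Kubernetes code. Unhealthy replicas are replaced, and the replica count follows the rule documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), clamped to a min/max range. The `Replica` type, the CPU metric, and the bounds are assumptions for illustration.

```python
import math
from dataclasses import dataclass
from itertools import count

_ids = count(1)


@dataclass
class Replica:
    """Hypothetical running container: an ID plus its last health-check result."""
    id: int
    healthy: bool = True


def new_replica() -> Replica:
    return Replica(id=next(_ids))


def desired_replicas(current: int, cpu_now: float, cpu_target: float,
                     min_r: int = 1, max_r: int = 10) -> int:
    """HPA-style scaling rule: ceil(current * now / target), clamped to bounds."""
    wanted = math.ceil(current * cpu_now / cpu_target)
    return max(min_r, min(max_r, wanted))


def reconcile(replicas: list[Replica], cpu_now: float,
              cpu_target: float) -> list[Replica]:
    """One pass of the control loop: auto heal, then auto scale."""
    # Auto heal: drop unhealthy replicas and start a fresh one for each.
    healthy = [r for r in replicas if r.healthy]
    healthy += [new_replica() for _ in range(len(replicas) - len(healthy))]

    # Auto scale: grow or shrink toward the count implied by the load.
    target = desired_replicas(len(healthy), cpu_now, cpu_target)
    if target > len(healthy):
        healthy += [new_replica() for _ in range(target - len(healthy))]
    return healthy[:target]


if __name__ == "__main__":
    fleet = [new_replica() for _ in range(3)]
    fleet[1].healthy = False                 # simulate a failed container
    fleet = reconcile(fleet, cpu_now=90.0, cpu_target=60.0)
    print(len(fleet), all(r.healthy for r in fleet))   # 5 True
```

A deployment management tool runs a loop like this continuously, so declaring "3 replicas at 60% CPU" is enough; replacing failures and resizing under load happen without an operator.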

Infrastructure Definition Tools

Infrastructure configuration, server imaging, and deployment management tools define the code that runs on servers, whereas infrastructure definition tools such as Terraform and CloudFormation create the servers themselves. Infrastructure definition tools can create servers, databases, caches, and load balancers, and configure other aspects of cloud infrastructure, including networking, security, and SSL certificates. Unlike the imperative approach of custom Bash scripts, definition tools like Terraform are declarative. In the imperative approach, you provide step-by-step instructions to create infrastructure, and the code has no memory of what already exists: it cannot tell whether the infrastructure only needs to be updated or must be created from scratch. In the declarative approach, the code describes the desired end state; the tool determines the current state and provisions only what is needed to reach that end state.
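The declarative idea can be illustrated with a small Python sketch of what such a tool does before changing anything: diff the desired end state against the current state and derive only the actions needed, roughly what `terraform plan` reports. The `plan` function and the resource names and attributes are hypothetical; real tools also track dependencies, persisted state, and provider APIs.

```python
def plan(desired: dict[str, dict], current: dict[str, dict]) -> list[tuple[str, str]]:
    """Compare desired vs. current resources and list the actions needed.

    Both arguments map a resource name to its attributes. Returns
    (action, name) pairs; resources that already match produce no action.
    """
    actions = []
    for name, attrs in desired.items():
        if name not in current:
            actions.append(("create", name))
        elif current[name] != attrs:
            actions.append(("update", name))
    for name in current:
        if name not in desired:
            actions.append(("delete", name))
    return actions


if __name__ == "__main__":
    desired = {
        "web_server": {"type": "server", "size": "large"},
        "app_db": {"type": "database", "engine": "postgres"},
    }
    current = {
        "web_server": {"type": "server", "size": "small"},
        "old_cache": {"type": "cache"},
    }
    print(plan(desired, current))
    # [('update', 'web_server'), ('create', 'app_db'), ('delete', 'old_cache')]

    # Once applied, current == desired, so a second run is a no-op.
    print(plan(desired, desired))  # []
```

Running an imperative script twice would attempt every step again, while running `plan` against infrastructure that already matches the desired state yields no actions at all.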

Some of the benefits of infrastructure definition tools like Terraform include:

- Self-service: With DevOps, infrastructure is expressed as code and continuously delivered (CI/CD). Developers who can read code can also understand the infrastructure code and manage their own application stacks independently.

- Speed and Safety: An automated deployment process is significantly faster than a manual one. It is also more consistent, more repeatable, and less prone to manual error.

- Documentation: Instead of the state of the infrastructure being locked away in the heads of one or more admins, it is represented as code that anyone can read to understand the desired state of the infrastructure. This helps new hires ramp up on the infrastructure with minimal assistance.

- Version Control: The entire history of the infrastructure's evolution is captured as commits in a version control system such as Git. This becomes a powerful debugging tool, because the first remediation step is often simply to revert the most recent changes and bring the infrastructure back to a previously known stable state.

- Validation: When infrastructure is expressed as code, it can be peer reviewed, put through a suite of automated tests, and checked by static analysis tools. These practices significantly reduce the chance of defects.

- Reuse: Instead of building every deployment from scratch, you can package infrastructure as reusable modules and roll them out consistently and reliably across environments.

- Cloud Portability: Terraform code written for one cloud provider can be converted to another provider with minimal manual effort, and rapid developments in generative AI will help accelerate these conversions. Nonetheless, depending on the complexity of the architecture, converting Terraform code from one cloud provider to another may still require significant manual effort.
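The Reuse and Validation benefits can be illustrated together. In the minimal Python sketch below, a hypothetical `web_module` function plays the role of a reusable module: it produces the same resource layout for any environment, varying only its inputs. A hypothetical `validate` function then acts like a simple static-analysis check run before anything is deployed, flagging firewall rules that open SSH (port 22) to the whole internet. Real projects would use Terraform modules and dedicated policy or linting tools; every name here is an illustrative assumption.

```python
def web_module(env: str, instance_count: int, ssh_cidr: str) -> list[dict]:
    """Hypothetical reusable module: same layout for every environment."""
    servers = [
        {"kind": "server", "name": f"{env}-web-{i}", "size": "small"}
        for i in range(instance_count)
    ]
    firewall = {
        "kind": "firewall_rule",
        "name": f"{env}-ssh",
        "port": 22,
        "source_cidr": ssh_cidr,
    }
    return servers + [firewall]


def validate(resources: list[dict]) -> list[str]:
    """Static check run before deployment; returns a list of violations."""
    errors = []
    for r in resources:
        if (r["kind"] == "firewall_rule" and r["port"] == 22
                and r["source_cidr"] == "0.0.0.0/0"):
            errors.append(f"{r['name']}: SSH must not be open to the internet")
    return errors


if __name__ == "__main__":
    staging = web_module("staging", instance_count=1, ssh_cidr="10.0.0.0/8")
    prod = web_module("prod", instance_count=3, ssh_cidr="0.0.0.0/0")
    print(validate(staging))  # []
    print(validate(prod))     # ['prod-ssh: SSH must not be open to the internet']
```

In a CI/CD pipeline, a non-empty result from a check like `validate` would fail the build before the change reaches any environment.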

Conclusion

By tracing this history, we understand the challenges faced at each stage and the solutions devised for them, which helps us grasp the significance of current methodologies. In essence, studying the evolution of infrastructure management tools gives us insight into best practices, technological trends, and emerging paradigms (such as convention over configuration), enabling us to make informed decisions, anticipate future directions, and drive innovation in DevOps and cloud computing.

This post is a concise selection from the highly regarded book “Terraform: Up & Running” by Yevgeniy Brikman. If this post interests you and you would like to know more about Terraform and the book, visit https://www.terraformupandrunning.com.

Written by,

Pradeep Thopae

Director of Delivery